As the dust settles on Generative AI’s record-breaking debut, the lawsuits and investigations have begun, the academic studies are trickling in and many PR professionals are still asking themselves: Should I be using AI to get my work done?

The answer is complex, and picking a path forward has never been trickier. Before you put your brand’s reputation in the hands of a machine, you’ll need a policy and an approach that safeguard your team and head off the biggest risks. Read on for watch-outs and tips for a successful rollout.

The biggest watch-outs

One of the biggest risks of using AI is that there’s no guarantee of accuracy. Generative AI can return false information and draw inaccurate conclusions. It is prone to “hallucinations”, confidently presenting invented material as fact, so it is not a reliable source of information. One attorney found this out the hard way in court: he had used ChatGPT to write a brief, and the tool cited cases that didn’t exist.

Even when it is accurate, AI draws on existing information, so the result rarely sounds original and can often be quite generic. As marketers and communicators, we crave differentiation and don’t want to sound like anyone else. Yet sounding like everyone else is, almost by definition, what generative AI has been trained to do.

And then there’s confidentiality. Those in communications and marketing are often given access to privileged information before it is made public. To get the best results from a generative AI platform, you need to prompt it carefully and with detailed guidance. Doing this with pre-release material could open a can of worms, breaching all sorts of confidences.

As the technology scales and evolves, so will the risks. For now, here are a few areas to watch out for:

- Copyright infringement: As a user, it’s hard to assess whether AI output infringes on existing copyright, because in many cases you simply don’t know what the model was trained on, or how much of its source content it is repeating verbatim. This is especially true of image generation. A few models, most notably Adobe’s, have been trained exclusively on licensed or owned content, and for the sake of safety and caution, you should limit your usage to those.

- Ownership transference: Providing a generative AI tool with an idea doesn’t mean you own the results. Users must understand the terms of service, particularly when it comes to rights, licensing and ownership. Content generated solely by AI currently can’t be protected by copyright.

- Plagiarism: Just as you can’t easily confirm or deny an output’s accuracy, you can’t easily tell whether it has been plagiarized.

- Discrimination and bias: AI models can be trained in ways that make them non-inclusive and biased toward specific populations. Bias can manifest in written and visual content and is incredibly hard to detect when viewing a single output in isolation. Bloomberg’s analysis of more than 5,000 AI-generated images shows how much more clearly bias emerges at scale, and demonstrates how important it is that teams using these tools apply critical thinking to AI interpretations of their prompts.

- Security and privacy: Unless a tool’s terms explicitly state otherwise, assume that no information you share with it is secure or private. If an employee feeds the technology a brand’s content, private information could unintentionally become public. It may also be used to train the same models that a competitor later uses to their advantage.

- Indemnification: Agencies like Highwire routinely indemnify their clients against loss or damage resulting from their work. Depending on the terms and conditions of the AI tool used to produce that work, that may not always be possible. Moreover, some AI platforms’ terms shield the provider from liability for any harm their software causes when deployed by a user. Follow the trail: if the robots go wrong, it’s important you know where the blame will ultimately rest.

Ways to reduce risk and roll out generative AI tools

The best way to reduce risk and realize the benefits of generative AI tools is to balance experimentation and discovery — of new approaches, efficiencies and team empowerment — with organizational risk management, legal exposure and industry best practices.

To begin, consider using AI tools as a platform for idea generation. At the same time, understand that your messaging, owned content and earned media will now be crawled, cross-referenced, summarized and reinterpreted in new and unexpected ways. Try feeding in your own published content to see how it comes back, and work with publicly available content first.

Educate your teams to be good stewards of your brand: Safety, confidentiality, tone and quality

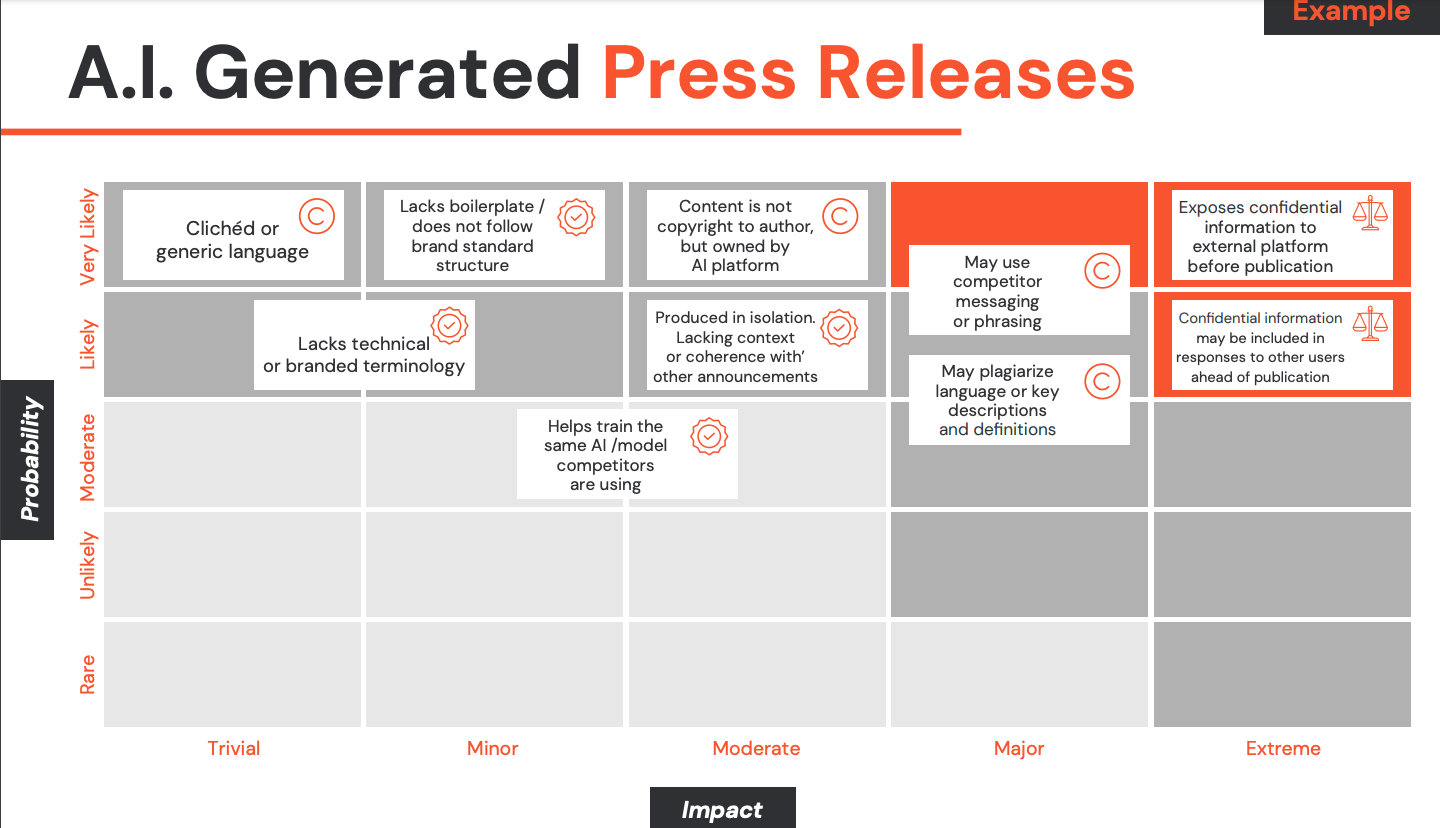

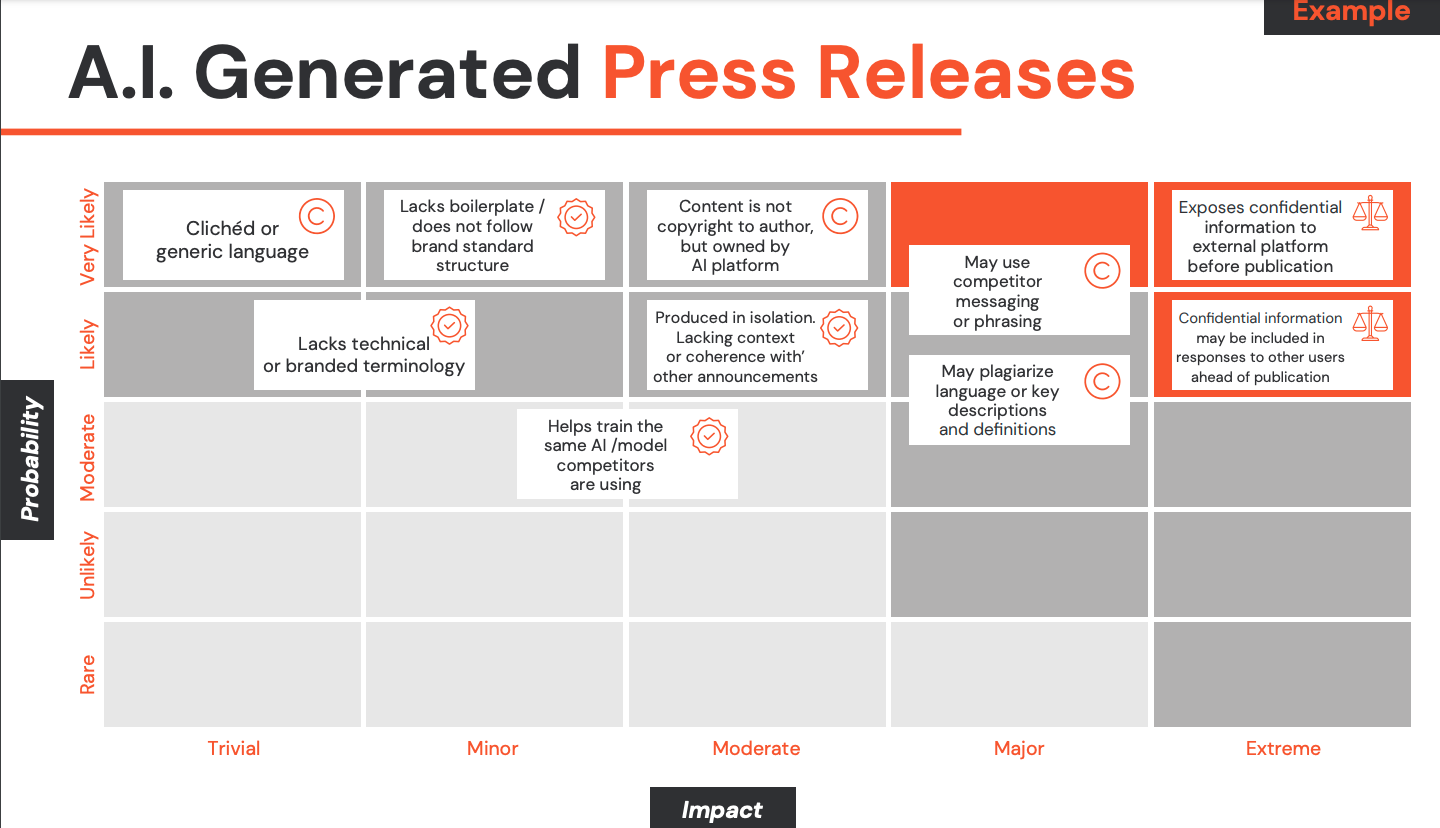

You can read more about how Highwire does this in our own Generative AI Framework. Work to establish your own ‘hot button’ areas and use cases, then map them using our AI Risk Maps to get a better understanding of what is, and is not, appropriate for your organization.

Counsel your teams on the situations in which it’s best to avoid AI, so they understand which areas carry less risk and allow more freedom.

Above all, firmly stick to marketing/comms best practices of truth, accuracy and consistency.

There’s no turning back, but don’t turn your back on AI

People in all industries have a lot of questions about how AI platforms will affect their roles, but perhaps none more than folks in communications, marketing, creative and content. The AI bandwagon seems to be overflowing with offers of lightning-fast, ultra-cheap marketing wins, powered by ChatGPT. But while those offers sound great, they’re rarely well-considered.

If you’re wondering whether your job is secure, the answer is yes, but the nature of your job may change. Data from Freelancer.com suggests that demand for human-generated creativity is booming in the face of AI. But the genie is out of the bottle, and there’s no turning back.

OpenAI, Microsoft, Google and many more enterprise platforms are bringing AI to employees, whether we approve of its use or not. It is essential that organizations understand the risks, map their own policies of acceptable use, and adapt, before they are adapted.

We realize that some of our clients may also wonder: Am I paying human rates for machine work? To be clear, Highwire considers it our duty to use AI with care and attention to client relationships, clarity around expectations and an honest discussion of value. We will never deploy Generative AI on behalf of a client without explicit permission, written into our contracts and scopes. That said, the prospect of doing so is already prompting discussions about the efficiency gains we can offer, and about whether we are being good stewards of budget if a client chooses not to use any form of Generative AI.

Whatever your personal risk tolerance, we recommend working with an agency that has clear policies around the use of Generative AI, is open and transparent about how they apply to your account, is constantly educating its staff about this fast-evolving technology and has clear guidance that you can rely on, practically, ethically and legally.

We’re happy to share more about our own approach, and you can read more about our Generative AI Framework here.